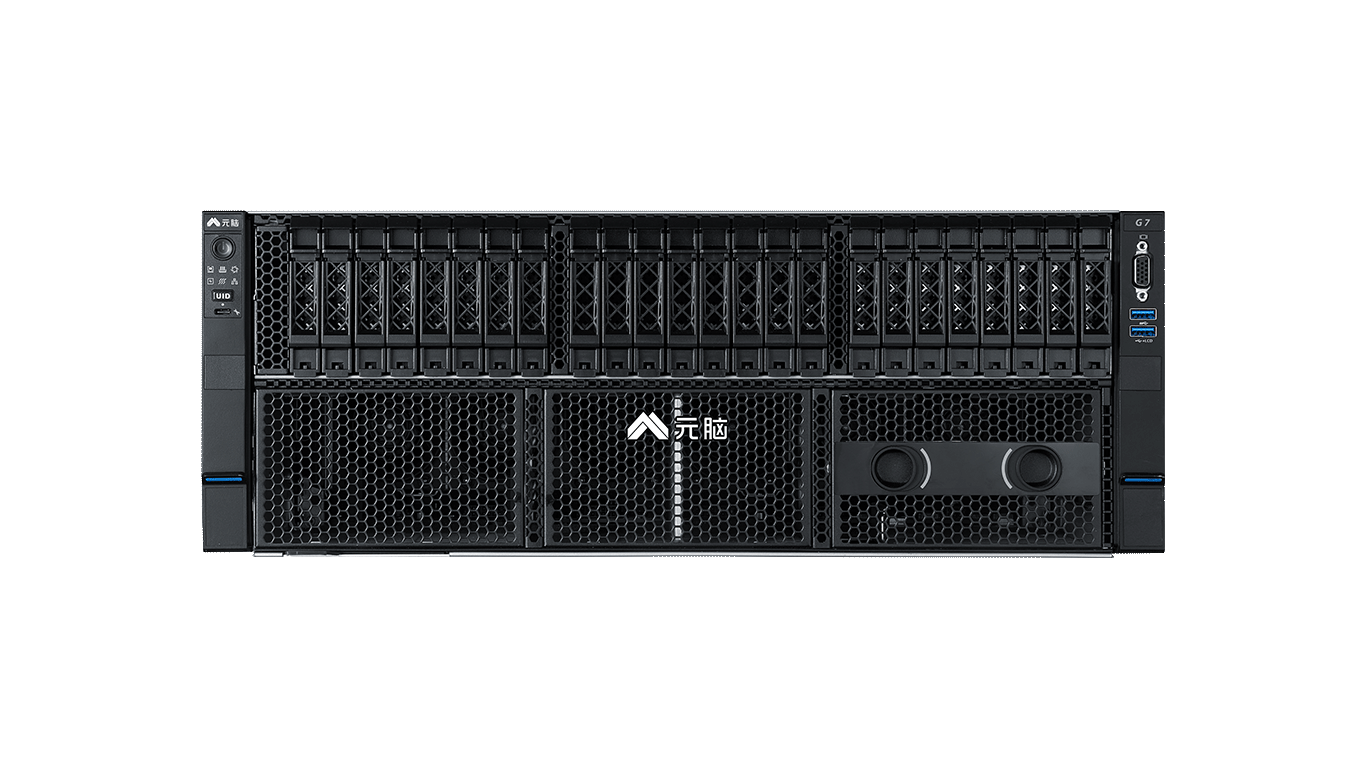

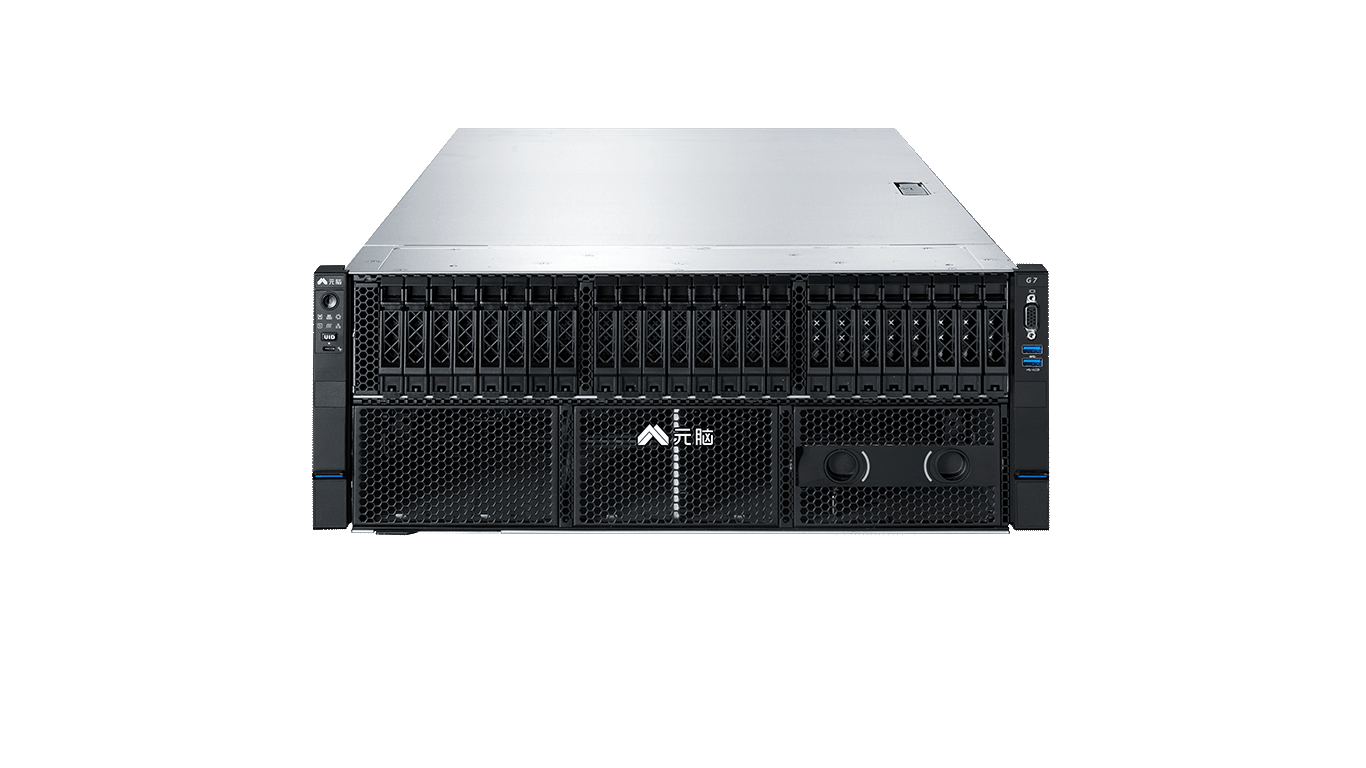

Inspur NF5688G8 AI GPU Server: Training Server, Inference Server, Hyper-Converged Server

The MetaBrain R1 inference server NF5688G8 is built on Inspur Information's new-generation AI hyper-converged architecture platform for hyperscale data centers. It is a powerful, highly scalable AI server with the highest compute density in a 6U space. It carries one Hopper-architecture HGX 8-GPU module and supports 4.0 Tbps of network bandwidth, enough to deploy the DeepSeek 671B large model on a single machine at native FP8 precision. It is equipped with two 5th Generation AMD EPYC processors (max cTDP 500W), up to 3TB of system memory, and 128TB of high-speed NVMe storage. It supports up to 12 PCIe Gen5 x16 expansion slots and can flexibly accommodate OCP 3.0, CX7, and a variety of smart NICs, building a powerful AI computing platform for large-model training, the metaverse, natural language understanding, recommendation, and AIGC.
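As a rough sanity check on the headline capacities, the sketch below divides them across the 24 DIMM slots and 16 NVMe drives listed in the specification table. It assumes every slot is populated with identical devices and reads the 3TB memory figure in binary units (as DIMM sizes are usually quoted); the per-device sizes it prints are implied by the arithmetic, not configurations confirmed by this document.

```python
# Implied per-device capacity if the stated maximums are reached with every
# slot populated by identical parts. ASSUMPTIONS: the 3TB memory figure is
# read in binary units (3 x 1024 GiB), as DIMM sizes usually are; the 128TB
# NVMe figure is read in decimal units.

DIMM_SLOTS = 24                 # DDR5 DIMM slots (specification table)
MAX_MEMORY_GIB = 3 * 1024       # stated maximum system memory
NVME_DRIVES = 16                # maximum NVMe drives (specification table)
MAX_NVME_TB = 128               # stated NVMe storage capacity

def implied_per_device(total: float, count: int) -> float:
    """Capacity each device needs for `count` identical devices to reach `total`."""
    return total / count

dimm_gib = implied_per_device(MAX_MEMORY_GIB, DIMM_SLOTS)
drive_tb = implied_per_device(MAX_NVME_TB, NVME_DRIVES)

print(f"{dimm_gib:.0f} GiB per DIMM, {drive_tb:.0f} TB per NVMe drive")
```

This prints `128 GiB per DIMM, 8 TB per NVMe drive`, so the quoted maximums correspond to fully populated slots with 128 GiB DIMMs and roughly 8 TB drives.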

Powerful Performance

Single-machine GPU memory capacity of 1128GB, enabling full deployment of the DeepSeek 671B model

Two 5th Generation AMD EPYC processors

Integrated Transformer Engine dramatically accelerates GPT large-model training
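The single-machine DeepSeek 671B claim can be checked with weights-only arithmetic. The sketch below is an estimate under stated assumptions: decimal gigabytes, exactly one byte per parameter at FP8, and no allowance for scale factors, layers kept at higher precision, KV cache, or framework overhead, all of which make real memory use larger.

```python
# Weights-only estimate: does DeepSeek 671B at FP8 fit in one NF5688G8?
# Assumptions: decimal GB (1 GB = 1e9 bytes), exactly 1 byte per parameter,
# and no allowance for scale factors, non-FP8 layers, or runtime buffers.

NUM_GPUS = 8                    # one HGX 8-GPU module
TOTAL_GPU_MEMORY_GB = 1128      # stated single-machine GPU memory
MODEL_PARAMS = 671e9            # DeepSeek 671B
BYTES_PER_PARAM_FP8 = 1         # FP8 = 8 bits = 1 byte per parameter

weights_gb = MODEL_PARAMS * BYTES_PER_PARAM_FP8 / 1e9
per_gpu_gb = TOTAL_GPU_MEMORY_GB / NUM_GPUS
headroom_gb = TOTAL_GPU_MEMORY_GB - weights_gb  # left for KV cache, activations, etc.
fits = weights_gb < TOTAL_GPU_MEMORY_GB

print(f"FP8 weights: {weights_gb:.0f} GB; per GPU: {per_gpu_gb:.0f} GB; "
      f"headroom: {headroom_gb:.0f} GB; fits: {fits}")
```

This prints `FP8 weights: 671 GB; per GPU: 141 GB; headroom: 457 GB; fits: True`. Repeating the calculation at BF16 (2 bytes per parameter) gives 1342 GB, which exceeds the 1128 GB available; that is why native FP8 precision matters for single-machine deployment.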

Ultimate Energy Efficiency

Extensively optimized heat dissipation, with a decoupled air duct design that improves system energy efficiency by 20%

Separate 12V and 54V power supplies with N+N redundancy reduce power conversion losses

Intelligent tiered cooling control reduces power consumption and noise

Leading Architecture

Full PCIe 5.0 high-speed links within each node, with a 4x increase in CPU-to-GPU bandwidth

High-speed interconnect between nodes, with 4.0 Tbps of non-blocking IB/RoCE network bandwidth

Cluster-optimized architecture with a GPU : compute IB : storage IB ratio of 8:8:2
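The 8:8:2 ratio and the 4.0 Tbps figure are consistent if each network port runs at 400 Gb/s. That per-port speed is an assumption (for example InfiniBand NDR or 400GbE RoCE) and is not stated in this document; the sketch below only shows how the numbers would add up under it.

```python
# Cross-check of the 8:8:2 (GPU : compute IB : storage IB) ratio against
# the quoted 4.0 Tbps. ASSUMPTION: every port runs at 400 Gb/s (for example
# InfiniBand NDR or 400GbE RoCE); the per-port speed is not given in the source.

GPUS = 8
COMPUTE_PORTS = 8      # one compute-network port per GPU
STORAGE_PORTS = 2
PORT_GBPS = 400        # assumed per-port line rate

total_tbps = (COMPUTE_PORTS + STORAGE_PORTS) * PORT_GBPS / 1000
compute_tbps = COMPUTE_PORTS * PORT_GBPS / 1000
compute_gbps_per_gpu = COMPUTE_PORTS * PORT_GBPS / GPUS

print(f"total {total_tbps} Tbps, compute {compute_tbps} Tbps, "
      f"{compute_gbps_per_gpu:.0f} Gb/s per GPU")
```

Under this assumption the output is `total 4.0 Tbps, compute 3.2 Tbps, 400 Gb/s per GPU`: the one-port-per-GPU layout gives each GPU its own dedicated compute-network link, while storage traffic uses separate ports.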

Diverse and Compatible

Fully modular design supporting multiple chip types in one machine, with flexible configuration for on-premises and cloud deployment

Accelerating large model training and inference

Leading support for diverse SuperPod solutions covering scenarios such as AIGC, AI4Science, and the metaverse

Technical Specifications

| Model number | nf5688-a8-a0-r0-00 |
|---|---|
| Height | 6U |
| GPU | 1 × HGX Hopper 8-GPU module |
| Processor | 2 × 5th Generation AMD EPYC™ processors, max cTDP 500W |
| Memory | 24 DDR5 DIMM slots, up to 6400 MT/s |
| Storage | Up to 24 × 2.5-inch SSDs, including up to 16 NVMe drives |
| M.2 | 2 × internal M.2 NVMe (optional) |
| PCIe slots | Up to 10 PCIe 5.0 x16 slots (one x16 slot can be replaced with two x16-size slots running at PCIe 5.0 x8); optional BlueField-3, CX7, and various smart NICs |
| RAID | Optional RAID 0/1/10/5/50/6/60, etc., with supercapacitor cache protection |
| Front I/O | 1 × USB 3.0 port, 1 × USB 2.0 port, 1 × VGA port |
| Rear I/O | 2 × USB 3.0 ports, 1 × Micro-USB port, 1 × VGA port, 1 × RJ45 management port |
| OCP | Optional 1 × OCP 3.0 NIC with NC-SI support |
| Management | DC-SCM BMC management module |
| TPM | TPM 2.0 |
| Fan modules | GPU area: 6 × 54V hot-swappable fans, N+1 redundant; CPU area: 6 × 12V hot-swappable fans, N+1 redundant |
| Power supply | 2 × 12V 3200W and 6 × 54V 2700W Titanium CRPS power supplies, N+N redundancy |
| Dimensions | 447mm (W) × 263mm (H) × 860mm (D) |
| Weight | Net: 92kg; gross: 107kg |
| Environment | Operating temperature: 10℃ to 35℃; storage temperature: -40℃ to 70℃; operating humidity: 10% to 90% RH; storage humidity: 10% to 93% RH |
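The power supply row can be turned into usable capacity per rail. The sketch below assumes N+N redundancy means the full load must be carried by half of the supplies on each rail, and it ignores any derating by input voltage; the helper `usable_watts` is hypothetical and only illustrates the arithmetic.

```python
# Usable power per rail under N+N redundancy, using the PSU counts and
# ratings from the table. ASSUMPTION: N+N means the full load must be carried
# by half of the supplies on each rail; input-voltage derating is ignored.

def usable_watts(units: int, watts_each: int) -> int:
    """Power still available if half of the supplies (the redundant N) fail."""
    if units % 2 != 0:
        raise ValueError("N+N redundancy requires an even number of supplies")
    return (units // 2) * watts_each

rail_12v_w = usable_watts(2, 3200)   # 1 active + 1 redundant
rail_54v_w = usable_watts(6, 2700)   # 3 active + 3 redundant
installed_w = 2 * 3200 + 6 * 2700

print(f"12V: {rail_12v_w} W usable, 54V: {rail_54v_w} W usable, "
      f"{installed_w} W installed")
```

This prints `12V: 3200 W usable, 54V: 8100 W usable, 22600 W installed`. Separating the rails means the 54V supplies, which also drive the GPU-area fans, can be sized for the GPU module independently of the 12V CPU side.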